RoboBees Can Fly and Swim. What’s Next? Laser Vision

Swarms of robotic bees, capable of seeing, may soon be able to monitor pollution and traffic, or scan the struts of bridges

![Robotic insect](https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/filer/63/da/63da8cfe-ceb7-4ba6-9867-7eec95a1abf5/roboticinsectphoto01.jpg)

Outfitted with tiny lasers for eyes, swarms of diminutive robot drones may soon be capable of pollinating fields of crops, searching collapsed buildings for survivors or taking air quality measurements over large areas.

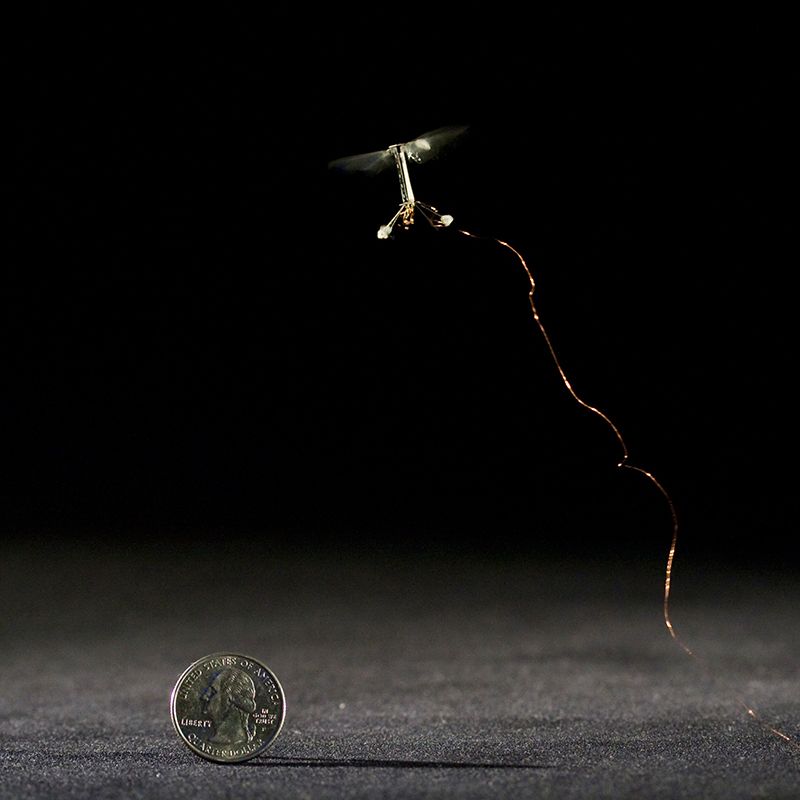

In 2012, researchers at Harvard University made headlines when they launched a robotic insect, weighing only milligrams, and watched as it successfully flew and landed; a year later, it was able to follow a pre-programmed path. Since then, the RoboBee has learned how to swim, but there’s still a big gap in its abilities: it can’t effectively see.

Researchers at the University at Buffalo and the University of Florida are working to change that. Over the next three years, with the help of a $1.1 million grant from the National Science Foundation, Karthik Dantu at Buffalo and Sanjeev Koppal at Florida are testing ways to shrink the technology used in lidar, or light detection and ranging, so the little drones can navigate toward a goal on their own instead of being steered there by a human operator. They’d be like the Google self-driving car, only thousands of times smaller.

“We needed a depth sensor for intelligent behavior,” Koppal says. “When we were thinking of which kind of techniques we could use, lidar was at the top of the list.”

Developed in the 1960s, soon after the invention of the laser, lidar works much like radar or sonar, but with light. By sending out rapid pulses of laser light, often at wavelengths invisible to the human eye, and timing how long the light takes to bounce back to its sensors, lidar builds a detailed picture of the surrounding environment. Lidar can image with visible, ultraviolet or near-infrared light, and because these wavelengths are much shorter than the radio waves radar uses, lidar can detect particles as tiny as airborne aerosols.
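The ranging step at the heart of pulsed lidar fits in a few lines. This is a minimal sketch that assumes time-of-flight ranging, in which the sensor measures each pulse’s round-trip time. The article does not say which ranging method the microlidar will use, and the function names are hypothetical. Because the pulse travels out and back, the distance to a surface is half the round-trip time multiplied by the speed of light.

```python
# Speed of light in a vacuum, in meters per second (close enough to air for a sketch).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the distance in meters to the surface that reflected a pulse.

    Assumes pulsed time-of-flight ranging: the pulse goes out and comes back,
    so the one-way distance is half of (speed of light x round-trip time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def ranges_from_echoes(round_trip_times_s: list[float]) -> list[float]:
    """Convert a sweep of echo timings into distances (a simple depth profile)."""
    return [range_from_round_trip(t) for t in round_trip_times_s]


if __name__ == "__main__":
    # A 10-nanosecond round trip corresponds to a surface about 1.5 meters away.
    print(f"{range_from_round_trip(10e-9):.3f} m")  # prints "1.499 m"
```

The arithmetic is easy, but the timing is hard at this scale. A 1-millimeter change in distance shifts the round trip by only about 6.7 picoseconds.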

But the smallest commercial lidar system weighs 830 grams, or nearly two pounds, while a RoboBee weighs a mere 80 milligrams, less than a small paperclip. In other words, building microlidar requires Ant-Man-level shrinkage.
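The scale of the problem is easy to check. Once both masses are expressed in grams, the smallest commercial unit turns out to outweigh the entire robot by a factor of roughly ten thousand:

```latex
\frac{830\ \text{g}}{80\ \text{mg}} = \frac{830\ \text{g}}{0.080\ \text{g}} \approx 1.04 \times 10^{4}
```

Even before power and wiring are considered, such a sensor would have to shed more than 99.99 percent of its mass just to match the weight of the bee itself.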

Conventional cameras couldn’t be used, Dantu explains, because the robots are simply too small. Perceiving depth with cameras requires two of them spaced a minimum distance apart, like a pair of eyes, and there isn’t that kind of room on the drone. Capturing and analyzing beams of light to gauge distance and depth was the logical path, because it relies on collecting light from any direction. Plus, cameras and image processing consume a great deal of power, which is also at a premium on the RoboBees. Around 97 percent of a robot bee’s total onboard power budget goes to flight, which leaves computing, sensing and every other system competing for the remaining 3 percent.
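The standard pinhole model of two-camera (stereo) depth shows why spacing matters. In that model, depth is Z = f·B/d. Here f is the focal length in pixels, B is the baseline (the distance between the cameras), and d is the disparity, meaning how far a feature shifts between the two images, in pixels. A one-pixel disparity error produces a depth error of roughly Z²/(f·B), so the error grows as the baseline shrinks. This is a minimal sketch. The focal length and baselines are hypothetical numbers chosen for illustration, not RoboBee specifications.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters from the pinhole stereo relation Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_error_px: float = 1.0) -> float:
    """Approximate depth uncertainty |dZ| ~ Z**2 * |dd| / (f * B).

    First-order error propagation of Z = f * B / d with respect to d.
    """
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


if __name__ == "__main__":
    FOCAL_PX = 300.0  # hypothetical focal length of a tiny camera, in pixels
    TARGET_M = 1.0    # an obstacle one meter away
    # Hypothetical baselines: roughly human eye spacing vs. a few millimeters on a tiny robot.
    for baseline_m in (0.065, 0.003):
        err = depth_error(FOCAL_PX, baseline_m, TARGET_M)
        print(f"baseline {baseline_m * 1000:.0f} mm -> depth error about {err:.2f} m")
```

With these made-up numbers, a 65-millimeter baseline keeps the one-pixel depth error near 5 centimeters. A 3-millimeter baseline produces an error larger than the one-meter distance being measured.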

With the grant, Koppal is designing new lightweight sensors, and Dantu is working on mathematical algorithms to help those sensors best use the data they collect. A colleague of Koppal’s at Florida, Huikai Xie, is working on building the necessary laser emitters.

First, the researchers will use a mirror with wide-angle optics on the drone to collect laser pulses from a remote lidar base station, and they will use that data to fine-tune the sensors’ algorithms. The second step is to mount a laser diode on the drone itself, powered either by a tether to a base station or by a battery. From there, the ultimate goal is to power the entire system onboard.

Microlidar could be used in endoscopic probes, the wand-like tools used during surgery that currently employ ultrasound to visualize internal organs and body structures. An entire swarm of robotic bees could monitor air pollution, weather or traffic patterns over a large area. Any discipline that currently employs lidar could potentially benefit, including topographic mapping, detection of seismic faults, identification of undiscovered mineral deposits, architectural planning and sewer maintenance.

Though Dantu and Koppal are focused on building a viable lidar system for the drone, how the data will be gathered and processed is a hurdle they discuss often. A single bee or a swarm could handle some of the data processing itself and collectively transmit the rest, via coded pulses of light, to a base station for in-depth computing.
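The article does not say how those light pulses would be coded. One simple scheme used in optical links is on-off keying, where the emitter switches on or off for each time slot. Manchester coding adds a transition in the middle of every bit, so the light never stays on or off for long and a receiver can stay synchronized. The following is a minimal sketch of that idea with hypothetical function names. It is not the researchers’ protocol, and conventions for which transition means 1 vary.

```python
def encode_manchester(payload: bytes) -> list[int]:
    """Turn bytes into a pulse train of 1 (light on) / 0 (light off) slots.

    Each bit becomes two slots with a transition in the middle:
    1 -> [0, 1] (off, then on), 0 -> [1, 0] (on, then off).
    Bits are sent most-significant first.
    """
    pulses: list[int] = []
    for byte in payload:
        for shift in range(7, -1, -1):
            bit = (byte >> shift) & 1
            pulses.extend([0, 1] if bit else [1, 0])
    return pulses


def decode_manchester(pulses: list[int]) -> bytes:
    """Invert encode_manchester, rejecting slot pairs that have no transition."""
    if len(pulses) % 16 != 0:
        raise ValueError("pulse train must contain a whole number of bytes")
    out = bytearray()
    for start in range(0, len(pulses), 16):
        byte = 0
        for i in range(start, start + 16, 2):
            pair = (pulses[i], pulses[i + 1])
            if pair == (0, 1):
                bit = 1
            elif pair == (1, 0):
                bit = 0
            else:
                raise ValueError(f"invalid slot pair {pair} at position {i}")
            byte = (byte << 1) | bit
        out.append(byte)
    return bytes(out)


if __name__ == "__main__":
    reading = b"\x2a"  # a hypothetical one-byte sensor reading
    train = encode_manchester(reading)
    print(train)
    print(decode_manchester(train))
```

Each bit costs two time slots, which halves the data rate. In exchange, the receiver can detect corrupted slots and recover timing from the signal itself.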

Michael Olsen, an associate professor of geomatics at Oregon State University, studies topography and terrain mapping with lidar, mainly using ground-based scanners to examine coastal erosion, bridge safety and earthquake engineering. He says one big constraint of conventional lidar systems is the inability to collect a complete data set.

“We inevitably have gaps in our data due to line of sight constraints,” Olsen says. “These RoboBees would potentially be very useful for helping to fill in some of these gaps to produce a more complete model. The downsizing of an active laser system, such as lidar, is quite a challenge, and what the researchers are tackling here is a whole new scale. It sounds like they have come up with some very interesting solutions to power, weight and size constraints.”

Fully realized, a swarm of microlidar-equipped bee drones could fly around trees in a dense forest to better capture the structure of each one, or up underneath the struts of a bridge, producing scans that are difficult to make with conventional techniques.

While lidar is currently used for research and industrial applications, microlidar could have many home-based or medical uses. House hunters could have access to a full 3D rendering of a home for sale and know the exact dimensions of rooms to plan how furniture might fit. Search and rescue missions could comb through small spaces within collapsed structures. Home-based systems could detect whether something is out of place or missing, or the degree to which the earth has shifted after a landslide or earthquake. And bodybuilders or weight-loss seekers could get regular and detailed scans of their bodies to know the extent of their progress.

Dantu and Koppal admit that these sorts of applications are still many years away, but they say the practical nature of the technology is promising.

“If you can do something on the RoboBee, you can do it anywhere,” Koppal says. “Microlidar could work wherever regular lidar is used. There are all kinds of applications in agriculture and industry where people already use lidar to map the factory floor or farm. In many cases, smaller and cheaper is just better.”

And remember, these lasers aren’t high-powered zappers. RoboBees won’t be using them to divide and conquer—only to get a more accurate view of the world around them.

![Michelle Donahue](https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/accounts/headshot/Michelle-Donahue.jpg)
