How Artificial Intelligence Could Revolutionize Archival Museum Research

A new study shows off a computer program’s specimen-sorting prowess

/https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/filer/34/e6/34e64b7f-f82b-4224-9530-911ee7ceff59/deeplearning1_iva_kostadinova.jpg)

When you think of artificial intelligence, the field of botany probably isn't uppermost in your mind. When you picture settings for cutting-edge computational research, century-old museums may not top the list. And yet, a just-published article in the Biodiversity Data Journal shows that some of the most exciting and portentous innovation in machine learning is taking place at none other than the National Herbarium of the National Museum of Natural History in Washington, D.C.

The paper, which demonstrates that digital neural networks are capable of distinguishing between two similar families of plants with rates of accuracy well over 90 percent, implies all sorts of mouth-watering possibilities for scientists and academics going forward. The study relies on software grounded in “deep learning” algorithms, which allow computer programs to accrue experience in much the same way human experts do, upping their game each time they run. Soon, this tech could enable comparative analyses of millions of distinct specimens from all corners of the globe—a proposition which would previously have demanded an untenable amount of human labor.

“This direction of research shows a great deal of promise,” says Stanford professor Mark Algee-Hewitt, a prominent voice in the digital humanities movement and assistant faculty director at the university's Center for Spatial and Textual Analysis. “These methods have the ability to give us vast amounts of information about what collections contain,” he says, and “in doing so they make this data accessible.”

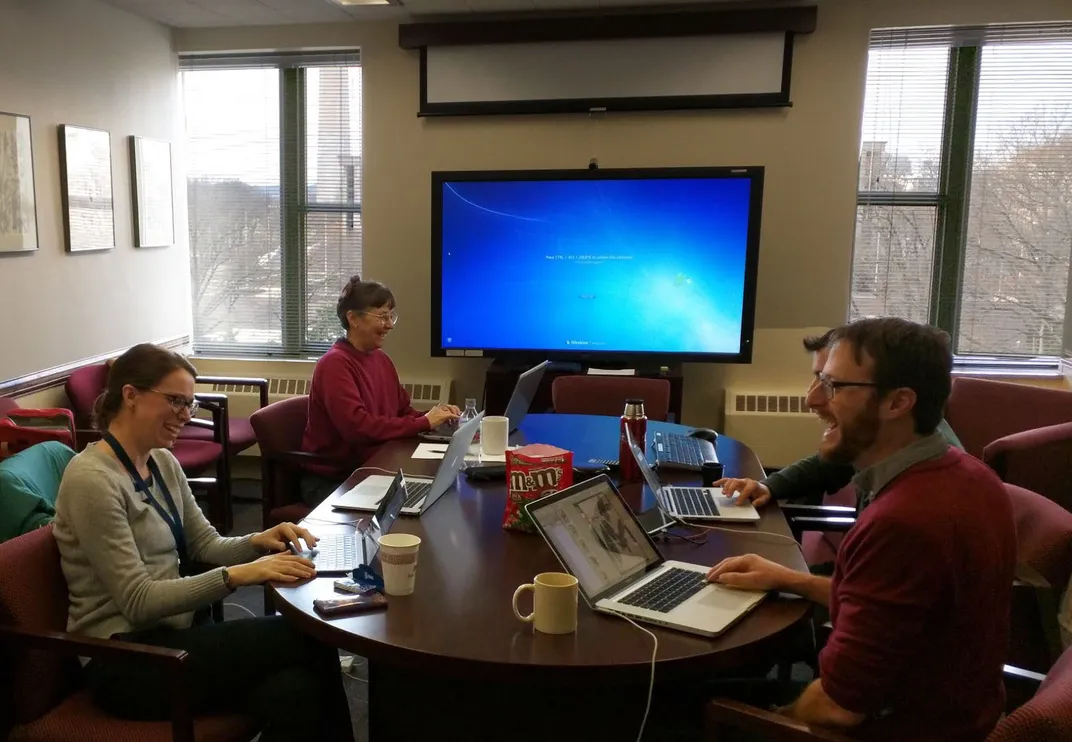

These new findings build on years of work undertaken at the Smithsonian Institution to systematically digitize its collections for academic and public access online, and represent a remarkable interdisciplinary meeting of minds: botanists, digitization experts and data scientists all had a part to play in bringing these results to light.

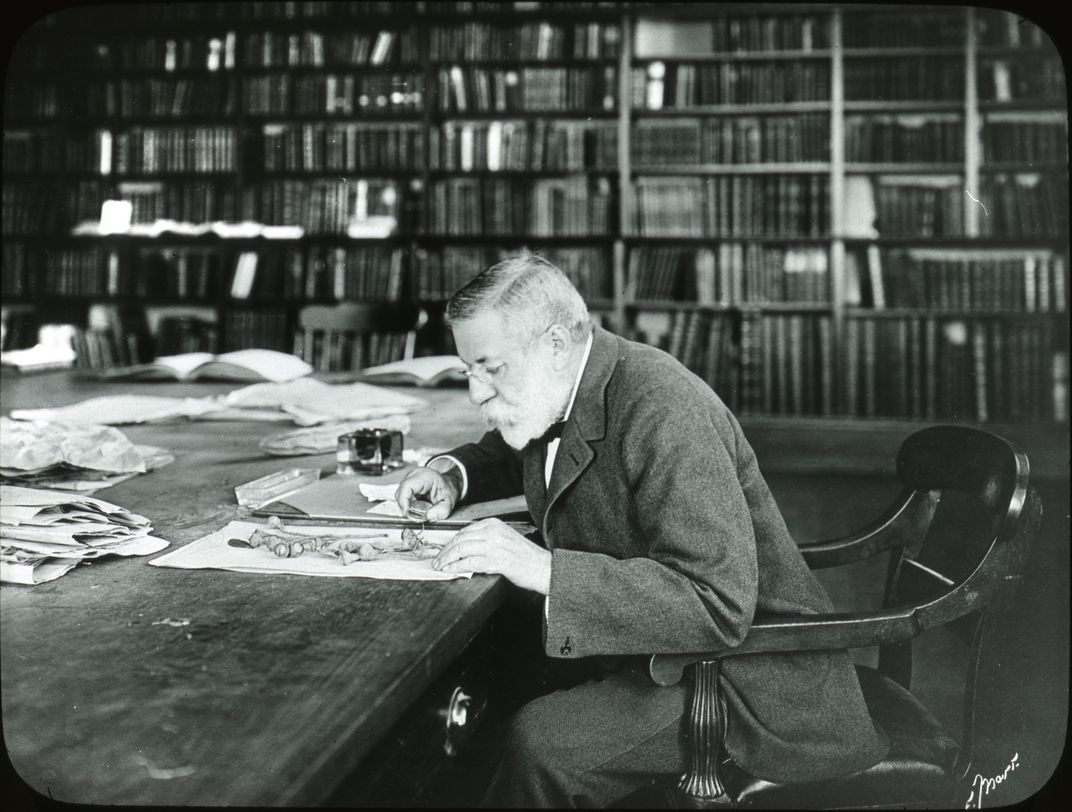

The story starts in October 2015, when the installation of a camera-and-conveyor belt apparatus beneath the Natural History Museum vastly simplified efforts to digitize the Smithsonian’s botanical collection. Instead of having to manually scan every pressed flower and clump of grass in their repository, workers could now queue up whole arrays of samples, let the belt work its magic, and retrieve and re-catalogue them at the tail end. A three-person crew has overseen the belt since its debut, and they go through some 750,000 specimens each year. Before long, the Smithsonian's herbarium inventory, five million specimens strong, will be entirely online.

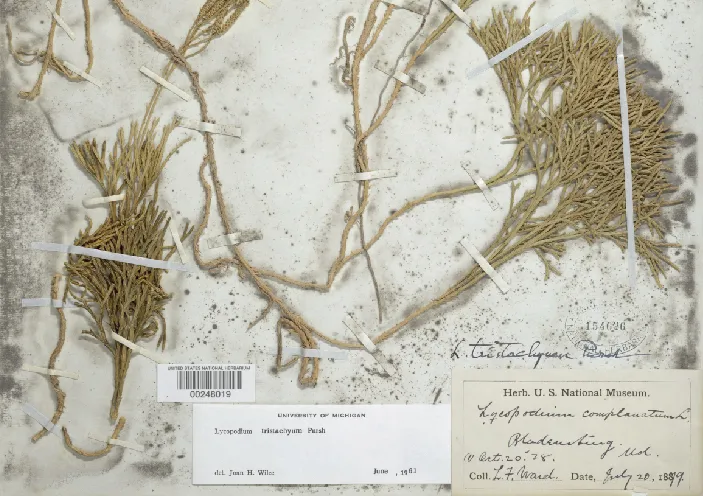

Each specimen is tagged with a thorough identification card, which provides information on its provenance as well as essential statistical data. The contents of these cards have been transcribed and uploaded alongside the digital images, providing a comprehensive view of each item in the collection for those with the inclination to go searching.

“It makes our collection accessible to anyone who has a computer and an internet connection,” says museum botany chair Laurence Dorr, “which is great for answering certain questions.” Even so, Dorr found he couldn't shake a feeling of untapped potential. Sure, massive amounts of specimen data were now available to the online community, but analyzing it in the aggregate remained fanciful. Looking up particular specimens and small categories of specimens was easy enough, but Dorr wondered if there existed a way to leverage the data to draw conclusions about thousands of specimens. “What can you do with this data?” he recalls wondering. A man named Adam Metallo soon provided a compelling answer.

Metallo, an officer with the Smithsonian’s Digitization Program Office, had attended a conference at which the tech giant NVIDIA—darling of PC gamers everywhere—was showcasing next-generation graphics processing units, or GPUs. Metallo was there looking for ways to improve upon the Smithsonian’s 3D digital rendering capabilities, but it was a largely unrelated nugget of information that caught his attention and stuck with him. In addition to generating dynamic, high-fidelity 3D visuals, he was told, NVIDIA’s GPUs were well-suited to big data analytics. In particular, beefed-up GPUs were just what was needed for intensive digital pattern recognition; many a machine learning algorithm had been optimized for the NVIDIA platform.

Metallo was instantly intrigued. This “deep learning” technology, already deployed in niche sectors like self-driving car development and medical radiology, held great potential for the world of museums—which, as Metallo points out, constitutes “the biggest and oldest dataset that we now have access to.”

“What does it mean for the big datasets we’re creating at the Smithsonian through digitization?” Metallo wanted to know. His question perfectly mirrored that of Laurence Dorr, and once the two connected, sparks began to fly. “The botany collection was one of the biggest collections we had most recently been working on,” Metallo remembers. A collaboration suggested itself.

Whereas many forms of machine learning demand that researchers flag key mathematical markers in the images to be analyzed—a painstaking process that amounts to holding the computer’s hand—modern-day deep learning algorithms can teach themselves which markers to look for on the job, saving time and opening the door to larger-scale inquiries. Nevertheless, writing a Smithsonian-specific deep learning program and calibrating it for discrete botanical research questions was a tricky business—Dorr and Metallo needed the help of data scientists to make their vision a reality.

One of the specialists they brought aboard was Smithsonian research data scientist Paul Frandsen, who immediately recognized the potential in creating an NVIDIA GPU-powered neural network to bring to bear on the botany collection. For Frandsen, this project symbolized a key first step down a wonderful and unexplored path. Soon, he says, “we’re going to start looking for morphological patterns on a global scale, and we’ll be able to answer these really big questions that would traditionally have taken thousands or millions of human-hours looking through the literature and classifying things. We’re going to be able to use algorithms to help us find those patterns and learn more about the world.”

The just-published findings are a striking proof of concept. Generated by a team of nine headed up by research botanist Eric Schuettpelz and data scientists Paul Frandsen and Rebecca Dikow, the study aims to answer two large-scale questions about machine learning and the herbarium. The first is how effective a trained neural network can be at sorting mercury-stained specimens from unsullied ones. The second, the highlight of the paper, is how effective such a network can be at differentiating members of two superficially similar families of plants—namely, the fern ally families Lycopodiaceae and Selaginellaceae.

The first trial required that the team go through thousands of specimens themselves in advance, noting definitively which ones were visibly contaminated with mercury (a vestige of outdated botanical preservation techniques). They wanted to be sure they knew with 100 percent certainty which were stained and which weren’t—otherwise, assessing the accuracy of the program would not be possible. The team cherry-picked nearly 8,000 images of clean samples and 8,000 more of stained samples with which to train and test the computer. By the time they finished tweaking the neural network parameters and withdrew all human assistance, the algorithm was categorizing specimens it had never seen before with 90 percent accuracy. If the most ambiguous specimens—e.g., those in which staining was minimal and/or very faint—were thrown out, that figure rose to 94 percent.

This result implies that deep learning software could soon help botanists and other scientists avoid wasting time on tedious sorting tasks. “The problem isn’t that a human can’t determine whether or not a specimen is stained with mercury,” Metallo clarifies, but rather that “it’s difficult to manually sort through and figure out where the contamination exists,” and not sensible to do so from a time management standpoint. Happily, machine learning could turn a major time sink into at most a few days of rapid automated analysis.

The species discrimination portion of the study is even more exciting. Researchers trained and tested the neural network with roughly 9,300 clubmoss and 9,100 spikemoss samples. As with the staining experiment, about 70 percent of these samples were used for initial calibration, 20 percent were used for refinement, and the final 10 percent were used to formally assess accuracy. Once the code was optimized, the computer’s rate of success at distinguishing between the two families was 96 percent—and a nearly perfect 99 percent if the trickiest samples were omitted.

One day, Frandsen speculates, programs like this could handle preliminary specimen categorization at museums across the globe. “In no way do I think these algorithms will do anything to replace curators,” he is quick to note, “but instead, I think they can help curators and people involved in systematics to be more productive, so they can do their work much more quickly.”

The neural network’s success in this study also paves the way for rapid testing of scientific hypotheses across massive collections. Dorr sees in the team’s findings the possibility of conducting extensive morphological comparisons of digitized samples—comparisons which could lead to significant scientific breakthroughs.

This is not to say that deep learning will be a silver bullet in research across the board. Stanford's Mark Algee-Hewitt points out that “it is almost impossible to reconstruct why and how a neural network makes its decisions” once it has been conditioned; determinations left to computer programs should always be uncomplicated and verifiable in nature if they are to be trusted.

“Obviously,” says Dorr, an autonomous computer program’s “not going to test for genetic relationships, things like that”—at least anytime in the near future. “But we can start to learn about distribution of characteristics by geographic region, or by taxonomic unit. And that’s going to be really powerful.”

More than anything, this research is a jumping-off point. It is clear now that deep learning technology holds great promise for scientists and other academics all over the world, as well as the curious public for which they produce knowledge. What remains is rigorous follow-up work.

“This is a small step,” says Frandsen, “but it’s a step that really tells us that these techniques can work on digitized museum specimens. We’re excited about setting up several more projects in the next few months, to try to test its limits a bit more.”

/https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/accounts/headshot/DSC_02399_copy.jpg)

/https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/accounts/headshot/DSC_02399_copy.jpg)