“New” Rembrandt Created, 347 Years After the Dutch Master’s Death

The painting was created using data from more than 168,000 fragments of Rembrandt’s work

Art history is plagued with questions. What if Vincent van Gogh had lived to paint another wheat field? What if Leonardo da Vinci had roped Mona Lisa into another portrait? What would another painting by Rembrandt look like? Usually, those intriguing inquiries go unanswered—but new technology just revealed a possible answer to that final query.

A “new” painting by Rembrandt was just revealed in the Netherlands, bringing the master’s talent for portraying light and shadow back to life. Don’t worry, the project didn’t involve reanimating Rembrandt’s corpse, which still rests somewhere beneath Amsterdam’s Westerkerk. Rather, it used Rembrandt’s other paintings as the basis for an ambitious project that combines art and today’s most impressive technology.

The painting, which is being billed as “The Next Rembrandt,” was created using data from more than 168,000 fragments of Rembrandt’s work. Over the course of 18 months, a group of engineers, Rembrandt experts and data scientists analyzed 346 of Rembrandt’s works, then trained a deep learning engine to “paint” in the master’s signature style.

To stay true to Rembrandt’s art, the team decided to flex the engine’s muscles on a portrait. They analyzed the demographics of the people Rembrandt painted over his lifetime and determined that the engine should paint a Caucasian male between 30 and 40 years of age with facial hair, dressed in black clothes with a white collar and wearing a hat.

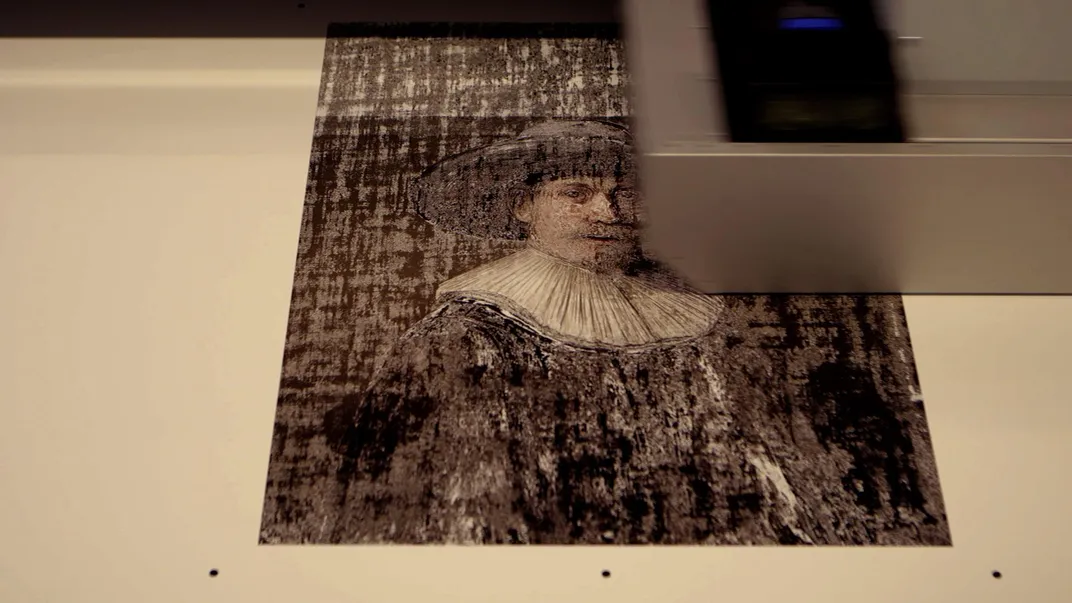

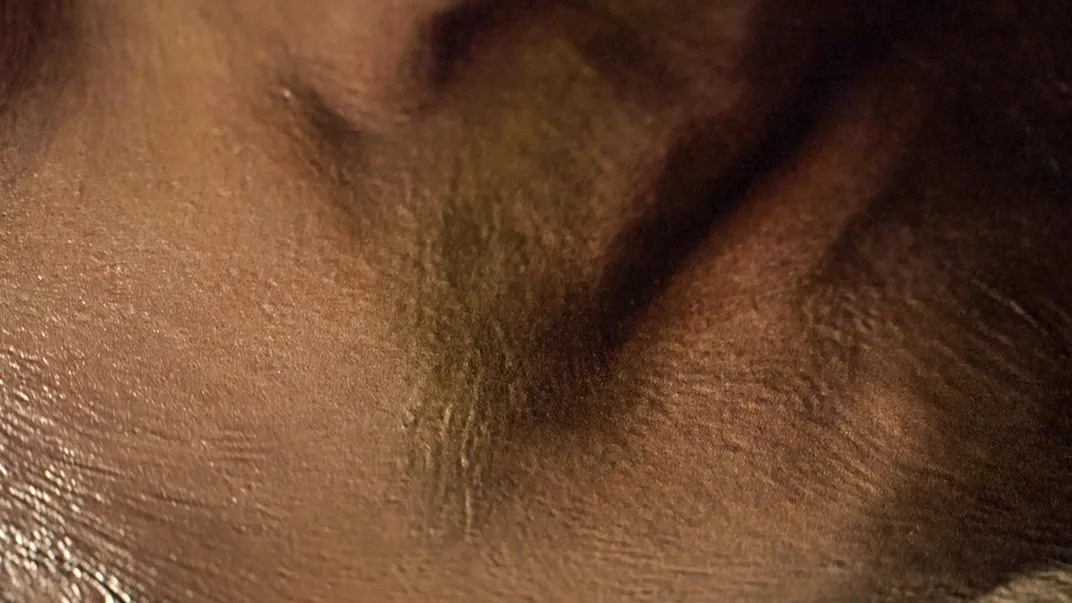

Using what it knew about Rembrandt’s style and his use of everything from geometry to paints, the machine then generated a 2D work of art that could pass for one by the Dutch painter himself. But things didn’t end there: the team then used 3D scans of the surface height of Rembrandt’s paintings to mimic his brushstrokes. Using a 3D printer and the resulting height map, they printed 13 layers of pigment. The final result, all 148 million pixels of it, looks so much like a painting Rembrandt made during his lifetime that you’d be forgiven if you walked right past it in a collection of his work.

Though the painting won’t be on display until a later date, it’s sure to draw curious crowds once it’s shown to the public. That’s precisely the point. A release explains that the piece is “intended to fuel the conversation about the relationship between art and algorithms, between data and human design and between technology and emotion.”

But does it belong on the walls of a museum? Images produced by Google’s Deep Dream neural network, which generates the trippy imagery the company calls “Inceptionism,” have already been sold at galleries and displayed at art exhibitions. The Rembrandt project takes that idea a step further by spinning off new pieces based on a single artist’s collected output, an idea that could lead to the resurrection of other beloved artists.

If a painting is ultimately generated by a machine and spit out by a printer, does it contain the soul of the person whose data seeded it? Who should get credit for the image: Rembrandt, or the team of engineers and art historians who helped create it? Is it art, or just a fun experiment? Sure, the painting may show Rembrandt fans what his next piece of art might have looked like, but like the best art, it leaves behind more questions than it answers.
