Inside Professor Nanayakkara’s Futuristic Augmented Human Lab

An engineer at the University of Auckland asks an important question: What can seamless human-computer interfaces do for humanity?


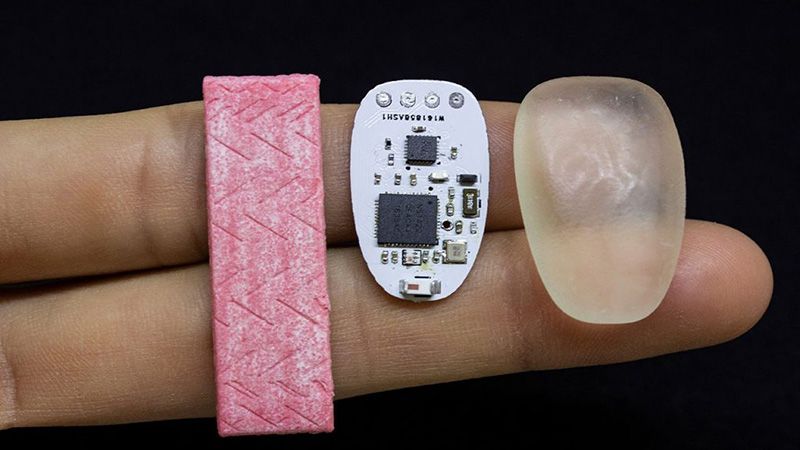

As user interfaces go, tonguing a soft, Bluetooth-enabled clicker the size of a wad of gum is one of the odder ways to select, or move, or click, or otherwise control a computer. But in certain situations, it makes a lot of sense. Say you're riding a bike and want to answer a call on your headset or look up directions, but don't want to take your hands off the handlebars. Or say you're paralyzed and need to drive an electric wheelchair: an unobtrusive directional pad in your mouth will be far less noticeable than a standard mouth or chin control, or even one you press with your shoulder.

“How can we reproduce these interactions while maintaining the discreetness of the interface?” says Pablo Gallego, one of the inventors of the device, called ChewIt. “People cannot tell if you are interacting with ChewIt, or if you have chewing gum or a gummy inside the mouth. Or maybe a caramel.”

Gallego settled on this idea, determined to refine it and build a prototype in pursuit of his master's degree in engineering at New Zealand's University of Auckland. Research showed that humans can recognize distinct shapes with their mouths, much as they can with their fingertips. And he knew we can tolerate gum and other foreign objects there. What followed were years of work optimizing the form factor. A round object wouldn't work, because the user couldn't tell how it was oriented. It had to be large enough to control, but small enough to tuck away in the cheek. Together with research fellow Denys Matthies, Gallego made ChewIt from an asymmetric blob of polymer resin containing a circuit board with a button that can control and steer a wheelchair.

Gallego and Matthies conceived and built ChewIt at the University of Auckland’s Augmented Human Lab, a research group engineering professor Suranga Nanayakkara assembled to invent tools designed to adapt technology for human use, rather than the other way around. There’s a mismatch, Nanayakkara reasoned, between what our technology does and how it interfaces with us. We shouldn’t have to learn it; it should learn us.

“Powerful technology, poorly designed, will make users feel disabled,” says Nanayakkara. “Powerful technology with the right man-machine interface will make people feel empowered, and that will make the human-to-human interaction in the foreground, [and] keep the technology in the background. It helps harness the full potential of technology.”

Nanayakkara has gone out of his way to ensure that the students and scientists in his prolific lab are free to build things based on their own interests, and to collaborate with one another on their ideas. The variety of technologies they've developed is remarkable. There's a welcome mat that recognizes residents by their footprints, including their weight and the wear patterns on their soles, and unlocks the door for them. There's a personal memory coach that engages the user via audio when it recognizes that he or she has the time and attention to practice. There's a smart cricket bat that helps users practice their grip and swing. And there's a step detector for elderly people's walking aids, because Fitbits and smartwatches often miscount steps when people are using rolling walkers.

And there's GymSoles. These smart insoles act like a weightlifting coach, helping wearers maintain correct form and posture during squats and deadlifts. "These have very distinct postures," says Samitha Elvitigala, who is building the device as part of his PhD candidacy. "There are some subtle movements that you have to follow, otherwise you will end up with injuries." Sensors in the soles track the pressure profile of the feet, calculate the center of pressure, and compare it with the pattern it should follow, revealing, say, whether the weightlifter is leaning too far back or too far forward. The device then provides haptic feedback in the form of subtle vibrations, indicating how the lifter should align herself. Once she properly adjusts her tilt and the positioning of her feet, legs and hips, her whole body falls into the appropriate form. Elvitigala is still refining the project, and is looking at how it could be used for other applications, such as improving balance in people with Parkinson's disease or stroke survivors.
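The article doesn't describe how GymSoles works internally. As a rough illustration only: a center of pressure is conventionally computed as the pressure-weighted average of the sensor positions. The sketch below assumes a hypothetical two-axis sensor layout, a single heel-to-toe target position, a fixed tolerance, and made-up cue strings; none of these come from the GymSoles project.

```python
from dataclasses import dataclass


@dataclass
class Sensor:
    """Position of one pressure sensor in the insole (hypothetical layout, in cm)."""
    x: float  # side-to-side position
    y: float  # heel-to-toe position (larger = closer to the toes)


def center_of_pressure(sensors, pressures):
    """Pressure-weighted average of sensor positions, returned as (x, y)."""
    total = sum(pressures)
    if total <= 0:
        raise ValueError("no load detected on the insole")
    cx = sum(s.x * p for s, p in zip(sensors, pressures)) / total
    cy = sum(s.y * p for s, p in zip(sensors, pressures)) / total
    return cx, cy


def posture_cue(cop_y, target_y, tolerance):
    """Compare the heel-to-toe center of pressure with a hypothetical target.

    Returns the cue a haptic motor might signal; the thresholds and wording
    are illustrative assumptions, not GymSoles' actual feedback scheme.
    """
    offset = cop_y - target_y
    if offset > tolerance:
        return "lean back"      # weight has drifted toward the toes
    if offset < -tolerance:
        return "lean forward"   # weight has drifted toward the heels
    return "ok"


# Usage: a heel sensor at y=0 and a toe sensor at y=20, with three times
# more pressure on the toes, puts the center of pressure at y=15.
sensors = [Sensor(0.0, 0.0), Sensor(0.0, 20.0)]
cop = center_of_pressure(sensors, [1.0, 3.0])   # (0.0, 15.0)
cue = posture_cue(cop[1], target_y=10.0, tolerance=2.0)  # "lean back"
```

A real insole would have many more sensors and a target pattern that changes over the course of a lift; the weighted average itself stays the same regardless of how many sensors are used.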

The origin of the Augmented Human Lab goes all the way back to an experience Nanayakkara had in high school. Working with students at a residential school for the deaf, he realized that everyone but him was communicating seamlessly. It made him rethink communication and abilities. “It’s not always about fixing disability, it’s about connecting with people,” he says. “I felt I needed something to be connected with them.” Later, he noticed a similar problem in communicating with computers.

He learned to think about it as a design problem while studying engineering, and then as a postdoc in computer scientist Pattie Maes’ Fluid Interfaces group, part of the MIT Media Lab. Like the Augmented Human Lab, the Fluid Interfaces group builds devices designed to enhance cognitive ability via seamless computer interfaces.

“Devices play a role in our lives, and at the moment their impact is very negative, on our physical well-being, our social well-being,” says Maes. “We need to find ways to integrate devices better into our physical lives, our social lives, so that they are less disruptive and have less negative effects.”

The goal, says Maes, is not to get computers to do everything for us. We'll be better off if they can teach us to do things better ourselves, and assist us as we do. For example, her students designed a pair of glasses that track the wearer's eye movements and brain activity (via EEG), and remind them to focus on a lecture or a reading when their attention is flagging. Another project uses augmented reality to help users map memories onto streets as they walk, a spatial memorization technique that memory champions call a "memory palace." Compare that with Google (maybe you search "Halloween costumes" instead of getting creative, says Maes) or Google Maps, which have largely replaced our need to retain information or understand where we are.

“We often forget that when we use some service like this, that augments us, there’s always a cost,” she says. “A lot of the devices and the systems that we build sort of augment a person with certain functions. But whenever you augment some task or ability, you also sometimes lose a little bit of that ability.”

Perhaps Nanayakkara’s best-known device, the FingerReader, began in his time at MIT. Designed for the visually impaired, FingerReader is simple in its interface—point the ring-borne camera at something, click, and the device will tell you what it is, or read whatever text is on it, through a set of headphones.

FingerReader followed Nanayakkara to Singapore, where he first set up the Augmented Human Lab at the Singapore University of Technology and Design, and then to the University of Auckland, where he moved his team of 15 in March 2018.* Along the way, he and his students have refined FingerReader and built subsequent versions. Like many of the lab's other devices, FingerReader has a provisional patent and could one day find its way to market. (Nanayakkara founded a startup called ZuZu Labs to produce the device, and is making a test run of a few hundred units.)

In some ways, the growing family of virtual assistants like Siri, Alexa and Google Assistant is tackling similar problems. They allow a more natural interface, and more natural communication, between people and their ubiquitous computers. But to Nanayakkara, they don't make his devices obsolete; they just offer a new tool to complement them.

“These enabling tech are great, they need to happen, it’s how the field advances,” he says. “But somebody has to think about how to best harness the full power of those. How can I leverage this to create the next most exciting man-machine interaction?”

*Editor's Note, April 15, 2019: A previous version of this article incorrectly stated that Suranga Nanayakkara moved his team from the Singapore University of Technology and Design to the University of Auckland in May 2018, when in fact, it was in March 2018. The story has been edited to correct that fact.