What Happens When Artificial Intelligence Turns On Us?

In a new book, James Barrat warns that artificial intelligence will one day outsmart humans, and there is no guarantee that it will be benevolent


Artificial intelligence has come a long way since R2-D2. These days, most millennials would be lost without smart GPS systems. Robots are already navigating battlefields, and drones may soon be delivering Amazon packages to our doorsteps.

Siri can solve complicated equations and tell you how to cook rice. She has even proven she can respond to questions with a sense of humor.

But all of these advances depend on a user giving the A.I. direction. What would happen if GPS units decided they didn’t want to go to the dry cleaners, or worse, Siri decided she could become smarter without you around?

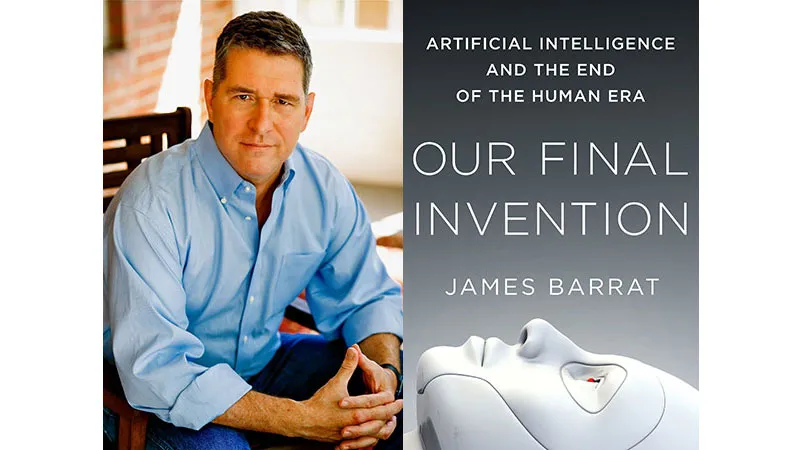

These are just the tamest of outcomes James Barrat, an author and documentary filmmaker, forecasts in his new book, Our Final Invention: Artificial Intelligence and the End of the Human Era.

Before long, Barrat says, artificial intelligence, from Siri to drones and data mining systems, will stop looking to humans for upgrades and start seeking improvements on its own. And unlike the R2-D2s and HALs of science fiction, the A.I. of our future won’t necessarily be friendly, he says: it could actually be what destroys us.

In a nutshell, can you explain your big idea?

In this century, scientists will create machines with intelligence that equals and then surpasses our own. But before we share the planet with super-intelligent machines, we must develop a science for understanding them. Otherwise, they’ll take control. And no, this isn’t science fiction.

Scientists have already created machines that are better than humans at chess, Jeopardy!, navigation, data mining, search, theorem proving and countless other tasks. Eventually, machines will be created that are better than humans at A.I. research.

At that point, they will be able to improve their own capabilities very quickly. These self-improving machines will pursue the goals they’re created with, whether they be space exploration, playing chess or picking stocks. To succeed they’ll seek and expend resources, be it energy or money. They’ll seek to avoid the failure modes, like being switched off or unplugged. In short, they’ll develop drives, including self-protection and resource acquisition—drives much like our own. They won’t hesitate to beg, borrow, steal and worse to get what they need.

How did you get interested in this topic?

I’m a documentary filmmaker. In 2000, I interviewed inventor Ray Kurzweil, roboticist Rodney Brooks and sci-fi legend Arthur C. Clarke for a TLC film about the making of the novel and film 2001: A Space Odyssey. The interviews explored the idea of HAL 9000 and malevolent computers. Kurzweil’s books have portrayed the A.I. future as a rapturous “singularity,” a period in which technological advances outpace humans’ ability to understand them. Yet he anticipated only good things emerging from A.I. that is strong enough to match and then surpass human intelligence. He predicts that we’ll be able to reprogram the cells of our bodies to defeat disease and aging. We’ll develop super endurance with nanobots that deliver more oxygen than red blood cells. We’ll supercharge our brains with computer implants so that we’ll become superintelligent. And we’ll port our brains to a more durable medium than our present “wetware” and live forever if we want to. Brooks was optimistic, insisting that A.I.-enhanced robots would be allies, not threats.

Scientist-turned-author Clarke, on the other hand, was pessimistic. He told me intelligence would win out, and humans would likely compete for survival with super-intelligent machines. He wasn’t specific about what would happen when we share the planet with super-intelligent machines, but he felt it’d be a struggle for mankind that we wouldn’t win.

That went against everything I had thought about A.I., so I began interviewing artificial intelligence experts.

What evidence do you have to support your idea?

Advanced artificial intelligence is a dual-use technology, like nuclear fission, capable of great good or great harm. We’re just starting to see the harm.

The NSA privacy scandal came about because the NSA developed very sophisticated data-mining tools. The agency used its power to plumb the metadata of millions of phone calls and the entirety of the Internet (critically, all email). Seduced by the power of data-mining A.I., an agency entrusted to protect the Constitution instead abused it. They developed tools too powerful for them to use responsibly.

Today, another ethical battle is brewing about making fully autonomous killer drones and battlefield robots powered by advanced A.I.: human-killers without humans in the loop. It pits the Department of Defense and the drone and robot makers it pays against people who think it’s foolhardy and immoral to create intelligent killing machines. Those in favor of autonomous drones and battlefield robots argue that they’ll be more moral, that is, less emotional, better at targeting and more disciplined than human operators. Those against taking humans out of the loop are looking at drones’ miserable history of killing civilians, and involvement in extralegal assassinations. Who shoulders the moral culpability when a robot kills? The robot makers, the robot users, or no one? Never mind the technical hurdles of telling friend from foe.

In the longer term, as experts in my book argue, A.I. approaching human-level intelligence won’t be easily controlled; unfortunately, super-intelligence doesn’t imply benevolence. As A.I. theorist Eliezer Yudkowsky of MIRI [the Machine Intelligence Research Institute] puts it, “The A.I. does not love you, nor does it hate you, but you are made of atoms it can use for something else.” If ethics can’t be built into a machine, then we’ll be creating super-intelligent psychopaths, creatures without moral compasses, and we won’t be their masters for long.

What is new about your thinking?

Individuals and groups as diverse as American computer scientist Bill Joy and MIRI have long warned that we have much to fear from machines whose intelligence eclipses our own. In Our Final Invention, I argue that A.I. will also be misused on the development path to human-level intelligence. Between today and the day when scientists create human-level intelligence, we’ll have A.I.-related mistakes and criminal applications.

Why hasn’t more been done, or, what is being done to stop A.I. from turning on us?

There’s not one reason, but many. Some experts don’t believe we’re close enough to creating human-level artificial intelligence and beyond to worry about its risks. Many A.I. makers win contracts with the Defense Advanced Research Projects Agency [DARPA] and don’t want to raise issues they consider political. The normalcy bias is a cognitive bias that prevents people from reacting to disasters and disasters in the making—that’s definitely part of it. But a lot of A.I. makers are doing something. Check out the scientists who advise MIRI. And, a lot more will get involved once the dangers of advanced A.I. enter mainstream dialogue.

Can you describe a moment when you knew this was big?

We humans steer the future not because we’re the fastest or the strongest creatures on the planet, but because we’re the smartest. When we share the planet with creatures smarter than ourselves, they’ll steer the future. When I understood this idea, I felt I was writing about the most important question of our time.

Every big thinker has predecessors whose work was crucial to his discovery. Who gave you the foundation to build your idea?

The foundations of A.I. risk analysis were developed by mathematician I. J. Good, science fiction writer Vernor Vinge, and others including A.I. developer Steve Omohundro. Today, MIRI and Oxford’s Future of Humanity Institute are almost alone in addressing this problem. Our Final Invention has about 30 pages of endnotes acknowledging these thinkers.

In researching and developing your idea, what has been the high point? And the low point?

The high points were writing Our Final Invention, and my ongoing dialogue with A.I. makers and theorists. People who program A.I. are aware of the safety issues and want to help come up with safeguards. For instance, MIRI is working on creating “friendly” A.I.

Computer scientist and theorist Steve Omohundro has advocated a “scaffolding” approach, in which provably safe A.I. helps build the next generation of A.I. to ensure that it too is safe. Then that A.I. does the same, and so on. I think a public-private partnership has to be created to bring A.I.-makers together to share ideas about security—something like the International Atomic Energy Agency, but in partnership with corporations. The low points? Realizing that the best, most advanced A.I. technology will be used to create weapons. And those weapons eventually will turn against us.

What two or three people are most likely to try to refute your argument? Why?

Inventor Ray Kurzweil is the chief apologist for advanced technologies. In my two interviews with him, he claimed that we would meld with the A.I. technologies through cognitive enhancements. Kurzweil and people broadly called transhumanists and singularitarians think A.I. and ultimately artificial general intelligence and beyond will evolve with us. For instance, computer implants will enhance our brains’ speed and overall capabilities. Eventually, we’ll develop the technology to transport our intelligence and consciousness into computers. Then super-intelligence will be at least partly human, which in theory would ensure super-intelligence was “safe.”

For many reasons, I’m not a fan of this point of view. Trouble is, we humans aren’t reliably safe, and it seems unlikely that super-intelligent humans will be either. We have no idea what happens to a human’s ethics after their intelligence is boosted. We have a biological basis for aggression that machines lack. Super-intelligence could very well be an aggression multiplier.

Who will be most affected by this idea?

Everyone on the planet has much to fear from the unregulated development of super-intelligent machines. An intelligence race is going on right now. Achieving A.G.I. is job number one for Google, IBM and many smaller companies like Vicarious and Deep Thought, as well as DARPA, the NSA and governments and companies abroad. Profit is the main motivation for that race. Imagine one likely goal: a virtual human brain at the price of a computer. It would be the most lucrative commodity in history. Imagine banks of thousands of PhD-quality brains working 24/7 on pharmaceutical development, cancer research, weapons development and much more. Who wouldn’t want to buy that technology?

Meanwhile, 56 nations are developing battlefield robots, and the drive is to make them, and drones, autonomous. They will be machines that kill, unsupervised by humans. Impoverished nations will be hurt most by autonomous drones and battlefield robots. Initially, only rich countries will be able to afford autonomous kill bots, so rich nations will wield these weapons against human soldiers from impoverished nations.

How might it change life as we know it?

Imagine: in as little as a decade, a half-dozen companies and nations field computers that rival or surpass human intelligence. Imagine what happens when those computers become expert at programming smart computers. Soon we’ll be sharing the planet with machines thousands or millions of times more intelligent than we are. And, all the while, each generation of this technology will be weaponized. Unregulated, it will be catastrophic.

What questions are left unanswered?

Solutions. The obvious solution would be to give the machines a moral sense that makes them value human life and property. But programming ethics into a machine turns out to be extremely hard. Moral norms differ from culture to culture, they change over time, and they’re contextual. If we humans can’t agree on when life begins, how can we tell a machine to protect life? Do we really want to be safe, or do we really want to be free? We can debate it all day and not reach a consensus, so how can we possibly program it?

We also, as I mentioned earlier, need to get A.I. developers together. In the 1970s, recombinant DNA researchers decided to suspend research and get together for a conference at Asilomar in Pacific Grove, California. They developed basic safety protocols like “don’t track the DNA out on your shoes,” for fear of contaminating the environment with genetic works in progress. Because of the “Asilomar Guidelines,” the world benefits from genetically modified crops, and gene therapy looks promising. So far as we know, accidents were avoided. It’s time for an Asilomar Conference for A.I.

What’s standing in the way?

A huge economic wind propels the development of advanced A.I. Human-level intelligence at the price of a computer will be the hottest commodity in history. Google and IBM won’t want to share their secrets with the public or competitors. The Department of Defense won’t want to open their labs to China and Israel, and vice-versa. Public awareness has to push policy towards openness and public-private partnerships designed to ensure safety.

What is next for you?

I’m a documentary filmmaker, so of course I’m thinking about a film version of Our Final Invention.
